In statistical modeling, maximum likelihood estimation is a method for estimating the parameters of a model. It is based on the likelihood function, which treats the observed data as fixed and is a function of the unknown parameters (1).

The likelihood function expresses how probable the observed values of a model's outcome variable are for a given value of an unknown parameter, denoted theta (θ). The outcome variable may be discrete, in which case the likelihood is built from the probability (mass) function, or continuous, in which case it is built from the probability density function (1). The likelihood function is then used to estimate the unknown parameter θ.

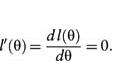
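
To make this concrete, suppose (as an illustrative assumption) that the data consist of n independent observations x_1 through x_n, and that f(x | θ) denotes the probability mass function or probability density function of the assumed model. The likelihood is then the product of the individual terms, viewed as a function of θ with the data held fixed:

$$
L(\theta) = \prod_{i=1}^{n} f(x_i \mid \theta)
$$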

With maximum likelihood estimation, the quantity being calculated is the maximum likelihood estimate (MLE): the parameter value under which the observed data are most probable (1). In practice the MLE is usually found from the log-likelihood, log L(θ), where L(θ) denotes the likelihood function. The estimate is obtained by taking the derivative of the log-likelihood with respect to θ and setting it equal to 0 (1):

$$
\frac{d}{d\theta} \log L(\theta) = 0
$$
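
As a worked example, assume (hypothetically) n independent Bernoulli trials with k successes, so that the success probability p plays the role of θ and the likelihood is L(p) = p^k (1 - p)^(n - k). Taking logs, differentiating, and setting the derivative to 0 gives the sample proportion, and the negative second derivative confirms that this stationary point is a maximum:

$$
\log L(p) = k \log p + (n - k)\log(1 - p), \qquad
\frac{d}{dp}\log L(p) = \frac{k}{p} - \frac{n - k}{1 - p} = 0
\;\Longrightarrow\; \hat{p} = \frac{k}{n}
$$

$$
\frac{d^2}{dp^2}\log L(p) = -\frac{k}{p^2} - \frac{n - k}{(1 - p)^2} < 0 \quad (0 < k < n)
$$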

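Solving this equation for θ locates the value at which the likelihood is maximized, provided the stationary point is a maximum rather than a minimum (which can be checked with the second derivative). More generally, a data set is treated as the observed values of random variables that are assumed to follow some distribution. The goal of maximum likelihood estimation is to estimate the value of θ for that distribution that is most consistent with the data, that is, the parameter value most likely to have generated them.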
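
A numerical check of the same procedure can be sketched in Python under illustrative assumptions: the data are hypothetical waiting times modeled as independent exponential draws with rate λ, for which log L(λ) = n log λ - λ Σ x_i, and setting the derivative n/λ - Σ x_i to 0 gives λ̂ = n / Σ x_i. The helper names and the ternary-search maximizer are illustrative choices, not a standard API.

```python
import math

def exp_log_likelihood(lam, xs):
    """Log-likelihood of rate lam for independent exponential observations xs."""
    return len(xs) * math.log(lam) - lam * sum(xs)

def ternary_search_max(f, lo, hi, iters=200):
    """Locate the maximum of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1  # the maximum lies to the right of m1
        else:
            hi = m2  # the maximum lies to the left of m2
    return (lo + hi) / 2

# Hypothetical sample of waiting times
xs = [0.8, 2.1, 0.3, 1.7, 0.6, 1.2, 0.9, 2.4]

# Closed form from setting the derivative of the log-likelihood to 0
closed_form = len(xs) / sum(xs)

# Direct numerical maximization of the log-likelihood
numeric = ternary_search_max(lambda lam: exp_log_likelihood(lam, xs), 1e-6, 10.0)

print(closed_form, numeric)
```

Ternary search is adequate here only because this log-likelihood is concave in λ and therefore unimodal; for general models a library optimizer would be the more usual choice.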

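The logarithm is used because it turns the product of probabilities (or densities) in the likelihood into a sum, which is easier to differentiate. Because the logarithm is strictly increasing, the log-likelihood reaches its maximum at the same value of θ as the likelihood itself.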
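
Working with sums also matters numerically. The sketch below assumes, purely for illustration, 2,000 independent draws from a normal model with known standard deviation 1: multiplying 2,000 densities underflows to 0.0 in double precision, so the raw likelihood cannot distinguish between candidate values of the mean, while the sum of log-densities stays finite and still peaks at the sample mean, which is the MLE of the mean for this model. The data and helper names are hypothetical.

```python
import math
import random

random.seed(0)
# Hypothetical sample: 2,000 draws from a normal distribution with mean 0 and sd 1
data = [random.gauss(0.0, 1.0) for _ in range(2000)]

def normal_log_pdf(x, mu, sigma):
    """Log of the normal density, computed directly so log(0) never occurs."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)

def likelihood(mu, sigma, xs):
    """Likelihood as a raw product of densities."""
    prod = 1.0
    for x in xs:
        prod *= math.exp(normal_log_pdf(x, mu, sigma))
    return prod

def log_likelihood(mu, sigma, xs):
    """Log-likelihood as a sum of log-densities."""
    return sum(normal_log_pdf(x, mu, sigma) for x in xs)

mean = sum(data) / len(data)  # MLE of mu for a normal model

print(likelihood(mean, 1.0, data), likelihood(mean + 0.1, 1.0, data))  # both underflow to 0.0
print(log_likelihood(mean, 1.0, data) > log_likelihood(mean + 0.1, 1.0, data))  # True
```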

References:

© BrainMass Inc. brainmass.com July 25, 2024, 1:09 pm ad1c9bdddf